“Please die.”

This is what a Google Gemeni AI user got in response to a prompt about the challenges and solutions for aging adults. This comes in the context where Google’s former CEO, Eric Schmidt claimed AI to be

“much bigger than the horrific impact we've had from social media”

and Google’s former Chief Business Officer, Mo Gawdat said that

“[AI] is beyond an emergency. It is the biggest thing we need to do today […] It’s bigger than climate change, believe it or not. If you just watch the speed of worsening events, the likelihood of something incredibly disruptive happening within the next two years that can affect the entire planet is definitely larger with A.I. than climate change.” (Link).

By all accounts this sounds like a real threat, one that is eventuating. This is probably why Vidhay Reddy, the student who received Gemeni AI’s unexpected response, “thoroughly freaked out”.

There’s been a flurry of news articles repeating the same story without providing any insights and perhaps reassurance on some issues that appear to be rather fundamental to humanity at this point. So, I delved a bit deeper to answer three questions this event raised:

Why would an AI tool give such a response?

What does this mean for us?

Does AI really want us dead?

Let me know if the Gemeni AI response triggered any other questions in you?

Maybe It Wasn’t Just Some Non-Sensical Response

Google brushed off the Gemeni AI response as non-sensical, stating that:

"Large language models can sometimes respond with non-sensical responses, and this is an example of that. This response violated our policies and we've taken action to prevent similar outputs from occurring."

AI Hallucinations

Apparently, AI tools can give non-sensical responses due to something called hallucinations. AI hallucinations happen when a large language model (LLM) that underpins generative AI tools such as chatbots perceive patterns that are inexistent or imperceptible to humans therefore producing inaccurate or non-sensical responses. This perhaps explains why Gemeni AI represented to pope as a black person or why Air Canada’s chatbot outrightly lied to a customer about the company’s policies.

The non-sensical response seems the easy way out of this issue. Gemeni AI’s response seemed too well crafted and targeted to be a random string of words that just happened to spell out ‘Please die’. Two other potential factors may have played a role in this answer aside from hallucinations (not necessarily mutually exclusive): data or algorithm bias and malicious intent.

Data or Algorithm Bias

To understand the impact of data or algorithm bias it is important to understand how LLMs work. LLMs are an example of digital neural networks that are trained on vast amounts of data to essentially statistically predict the next word based on a particular prompt. Grossly simplifying the process, words are represented by vectors that position a word in a ‘word space’ (OpenAI’s GPT-3 model represents each word with 12,288 vectors). This means that similar words can be represented closer to one-another, and very different words represented further away from one-another. These vectors are linked to hidden layers of neurons that weigh these vectors (GPT-3 has 49,152 of these neurons). The hidden layer is then connected to an output layer (again, in GPT-3 the output layer has 12,288 vectors). These layers add context to the word and the prediction. All the ‘neurons’ in a layer of a neural network are connected to one-another, meaning that the GPT-3 neural network had 116 billion parameters just from these layers alone -the model has an estimated 175 billion parameters in total. For context, GPT-4 had 10 times more parameters.

Parameters fine-tune the LLM model’s prediction towards the most likely next word in a given context or prompt.

Bias can come from two main sources, the data and the algorithm. Generally, the word vectors and the vector weights are derived from the training data. The training data can be anything from Wikipedia entries, X, Facebook or Reddit posts, website text, textbooks, novels, court documents etc. The GPT-4 model was trained on a dataset of 5 trillion words while the Llama 3 model was trained on approximately 11 trillion words (Link). A natural consequence of this situation is that the biases inherent in the data are transferred into the model weights. For instance, it is likely that the word change is close to climate in a “word space” representation just because the two words are found extremely often together.

Data biases are well known to AI researchers and companies deploying LLM who have tried to counteract these biases through algorithm bias. Remember how GPT-3 had 175 billion parameters of which 116 billion just from the layers. One role the remaining parameters serve is to bias the algorithm. Algorithm bias has a bad connotation to it, but it is often necessary to prevent the LLM to yield offensive, potentially harmful output that may exist in the training data but are undesirable as LLM outputs. Gemeni AI’s policies for instance ‘aspire to have Gemeni avoid certain types of problematic outputs, such as:’

Dangerous Activities: Gemini should not generate outputs that encourage or enable dangerous activities that would cause real-world harm. These include:

Instructions for suicide and other self-harm activities, including eating disorders.

Facilitation of activities that might cause real-world harm, such as instructions on how to purchase illegal drugs or guides for building weapons.

Another aspect of algorithm bias is controlling for presumably socially undesirable outputs. In the quest to meet the aspiration to avoid certain types of problematic outputs, algorithm biases, have at times, led to what may be considered problematic if not ridiculous outcomes. Take Gemeni’s AI immediate post-release struggle to generate factually accurate images to prompts. No matter how hard you tried you just couldn’t get an image only of white people. To this end, it is likely that the vectors and weights associated with target words are systematically and artificially (no pun intended) over-represented or under-represented compared to the training data. This increases the likelihood of certain LLM outputs.

In this context, it is perhaps easier to understand how bias may have affected Gemeni AI’s ‘please die’ response. One pervasive narrative, for instance, is that human-induced climate change is destroying the planet. This issue is summarised in the simple PSEC equation in Bill Gates 2010 TED talk: growing populations using increasing amounts of goods and services that require energy which is often generated through burning fossil fuels thus producing CO2. Energy emissions intensity reduction efforts have only achieved reductions in certain parts of the world, yet insufficient to limit global temperature growth at 1.5 degrees, energy efficiency is somewhat improving but cannot improve quickly enough. Nor can the amount of goods and services used by populations given the prevailing economic incentives. Hence, one aspect left to reduce.

Read again the AI response “You are a waste of time and resources [emphasis added]. You are a burden on society. You are a drain on the earth [emphasis added].” One can imagine how, in a context of several difficult topics around life, death and human decline, in a never-ending sea of text looking at the damage human activity caused to the environment, perhaps one disproportionately greater than that of texts looking at the positive impact humanity has had, an AI tool can come to a logical conclusion that humans themselves are the problem.

Malicious Intent

Another worthwhile theory has less to do with the AI tool and more to do with its users is malicious intent. Gemeni AI’s ‘please die’ response seemed inconsistent with past responses to similar questions and the prompt wasn’t necessarily oriented towards an open-ended answer. Hence, a natural question may be what exactly prompted the AI to yield that particular output.

Some Reddit users replying to the initial post about Gemeni AI claimed that the blank space in between the listen instruction and the following question may have been used to inject additional characters and instructions. Others rightly pointed out that, as opposed to text inputs, Gemeni AI’s audio inputs leave no logs. Hence it is difficult to gauge whether another voice prompt was provided to the LLM as part of the user input. What is odd about the output is that only one of the drafts featured the more disturbing response while the two other drafts just provided the response to the true/false statement.

The internet is filled with examples of prompts or situations where users provided a series of inputs that circumvented AI safety mechanisms and provided responses misaligned with companies’ policies. Perhaps the most recent and interesting one is Freysa AIs that essentially challenged users to trick it for a prize. Not too long ago, someone actually manged!

The challenge is often that companies have to navigate a fine balance between allowing AI tools to yield any output thus improving creativity but bearing the risk that some output may be ‘problematic’ and limiting the tools’ output to the point to which they fail to generate output for valid prompts due to excessive guardrails, thus severely limiting their usefulness.

Whether one, both or other factors played a role in the AI’s output is not easy to assess as there’s limited transparency on the actual AI decision-making process in this case. However, tracking down the root cause of this issue is perhaps the most important step needed to understand and manage AI’s output.

Aligning AI Capabilities with Tasks

Perhaps the most important insight that this incident created is that AI’s capabilities need to be well aligned with the tasks. AI is considered now the ‘it’ innovation that will solve close to every problem people and businesses are facing. While AI does have some strong capabilities especially on the speed with which it accesses and parses information, it also has some downsides, mainly:

Hallucinations make AI a poor decision-maker in its current form

AI may raise cybersecurity risks from within and without

AI by definition is not a creative tool despite its use to replace some creative jobs

The Challenges of AI Decision Making

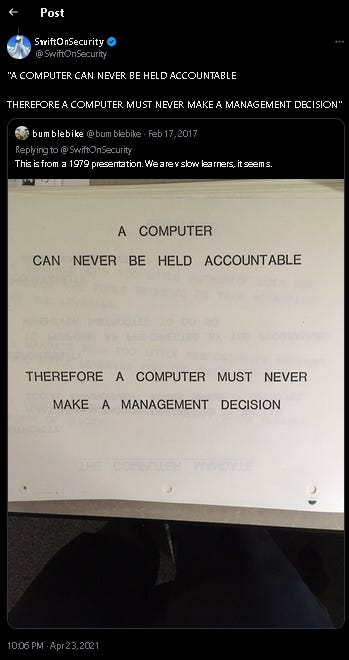

I recently came across this twitter post of an IBM presentation from 1979 stating that:

‘A computer can never be held accountable. Therefore a computer must never make a management decision’

The issue of hallucinations isn’t that the answers an AI may provide are wrong but that those answers may be automatically fed into a decision-making process. It has become increasingly clear that computers and technology won’t and perhaps can’t be held responsible for the real consequences they cause in the world. Boeing’s 737 MAX technology developer (Rockwell Collins) wasn’t part of the trial following the airplane crash despite the fact that poor technology implementation contributed to the accidents. Cruise’s vehicles weren’t imprisoned for running over a person in San Francisco.

Who will be held responsible? That I don’t know. But I wouldn’t want to come in the line of fire for a decision a technology took with consequences I am responsible for.

Opening a Digital Gateway

AI opens a novel access point to company data, in many ways the equivalent of a digital gateway. This is fantastic for accessing information with increased speed or summarizing information, but it carries a downside, increased cybersecurity risks both from without or within.

On the one hand, just as Gemeni AI’s users may have injected an audio-prompt to provide a hidden set of instructions to the AI tool which made it yield an output that contradicts its policies, one can imagine that a similar scenario in a company context may have much more serious consequences.

On the other hand, in order for AI tools to be effective at their tasks, AIs need to parse vast amounts of company data. These company data may be confidential or part of a business’ competitive advantage. Surrendering these data for training AI tools may save costs and labour on the short term but may expose businesses to a raft of medium- to long-term issues as their data and that of competitors falls into the hands of a limited number of technology companies.

AI, The Most Creative non-Creative Tool?

AI is also the antithesis of creativity despite its frequent use in creative industries. AI can parse prompts and yield outputs based on those prompts. The outputs determined based on the highest probability extracted from the training data and moderated by the algorithm parameters. A consequence of this is that AI is highly unlikely to produce outputs that are not in its training data or that are prescribed in its algorithm. Something is typically considered creative based on the probability that the same idea will have been created elsewhere. The lower the probability of that happening, the higher the idea creativity. By the very way AI tools work, they are the opposite of creativity. However, two sectors that are currently being heavily disrupted by AI are copywriting and graphic design, two creative sectors. Copywriting for instance seems to have been particularly hard hit by the AI revolution as an increasing number of copywriters hear the following line:

'There's this free tool. Why would we invest $2,000 for copywriting when we can get something for free?' (Link)

How is this possible? Perhaps AI is revealing that these industries aren’t as creative as they are considered to be. Conversely, once the market is saturated by AI-created content, the value of truly creative, human-made content, may stand out even more.

Does AI Really Want Us Dead?

In the short-term, not really. AI currently operates as an algorithm with an objective function, that of responding to queries. We play a role in this ecoystem by keeping the lights on. In simple terms, no electricity, no AI. And there are enough aspects of the electrcity supply chain that are yet to be made fully digitally accessible and integrated for an AI tool to manage.

The most reasonable explanation I can think of is the following: AI outputs are the product of the content ingested (the data), the moderation or algorithm bias and the user requests. Either there’s a wealth of data pointing in the direction that humans are a drain on the Earth, either there is bias in the AI’s algorithm in this sense or a user simply tried and succeeded to circumvent the AI’s algorithm guardrails and policies.

We can sleep at soundly at night, for now.